Bayesian Networks 3 - Maximum Likelihood - Stanford CS221: AI (Autumn 2019)

Description

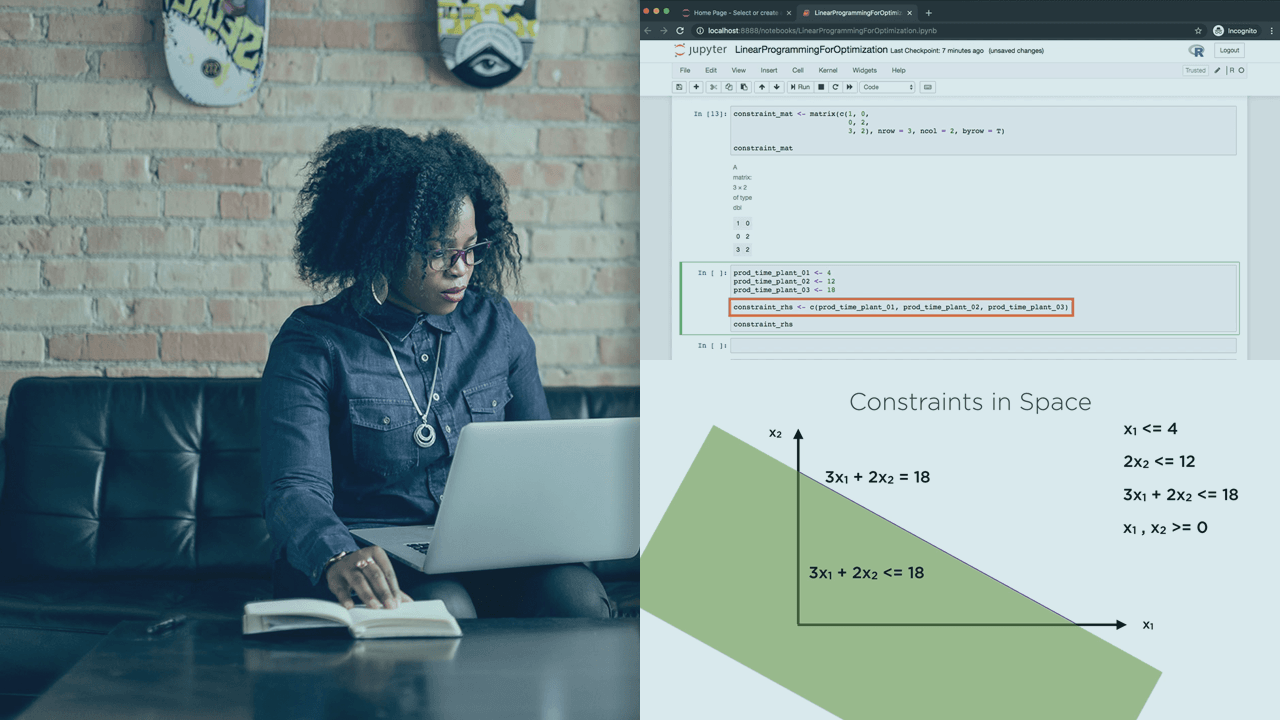

This lecture, the third in the Stanford CS221 Bayesian networks series, teaches how to estimate the parameters of a Bayesian network with maximum likelihood. It covers where parameters come from, the learning task, parameter sharing, Naive Bayes, HMMs, Laplace smoothing, maximum marginal likelihood, and Expectation Maximization (EM). The material combines theoretical explanations with worked examples and scenarios, and is intended for learners who want to deepen their knowledge of Bayesian networks and AI.

Syllabus

Introduction.

Announcements.

Review: Bayesian network.

Review: probabilistic inference.

Where do parameters come from?

Roadmap.

Learning task.

Example: one variable.

Example: v-structure.

Example: inverted-v structure.

Parameter sharing.

Example: Naive Bayes.

Example: HMMs.

General case: learning algorithm.

Maximum likelihood.

Scenario 2.

Regularization: Laplace smoothing.

Example: two variables.

Motivation.

Maximum marginal likelihood.

Expectation Maximization (EM).

Type: Online Courses

Provider: YouTube

Related Courses

MathTrackX: Polynomials, Functions and Graphs

คณิตเศรษฐศาสตร์และเศรษฐมิติเพื่อการธุรกิจ | Mathematical Economics and Econometrics for Business

Differential Equations Part I Basic Theory

Çok değişkenli Fonksiyon II: Uygulamalar / Multivariable Calculus II: Applications

Calculus through Data & Modeling: Differentiation Rules

Linear Algebra I: Linear Equations

Cálculo Diferencial

Algèbre Linéaire (Partie 1)

Solving Problems with Numerical Methods

Math Fundamentals (2020)
